Compositional Text-to-Image Generation with Dense Blob Representations

Abstract

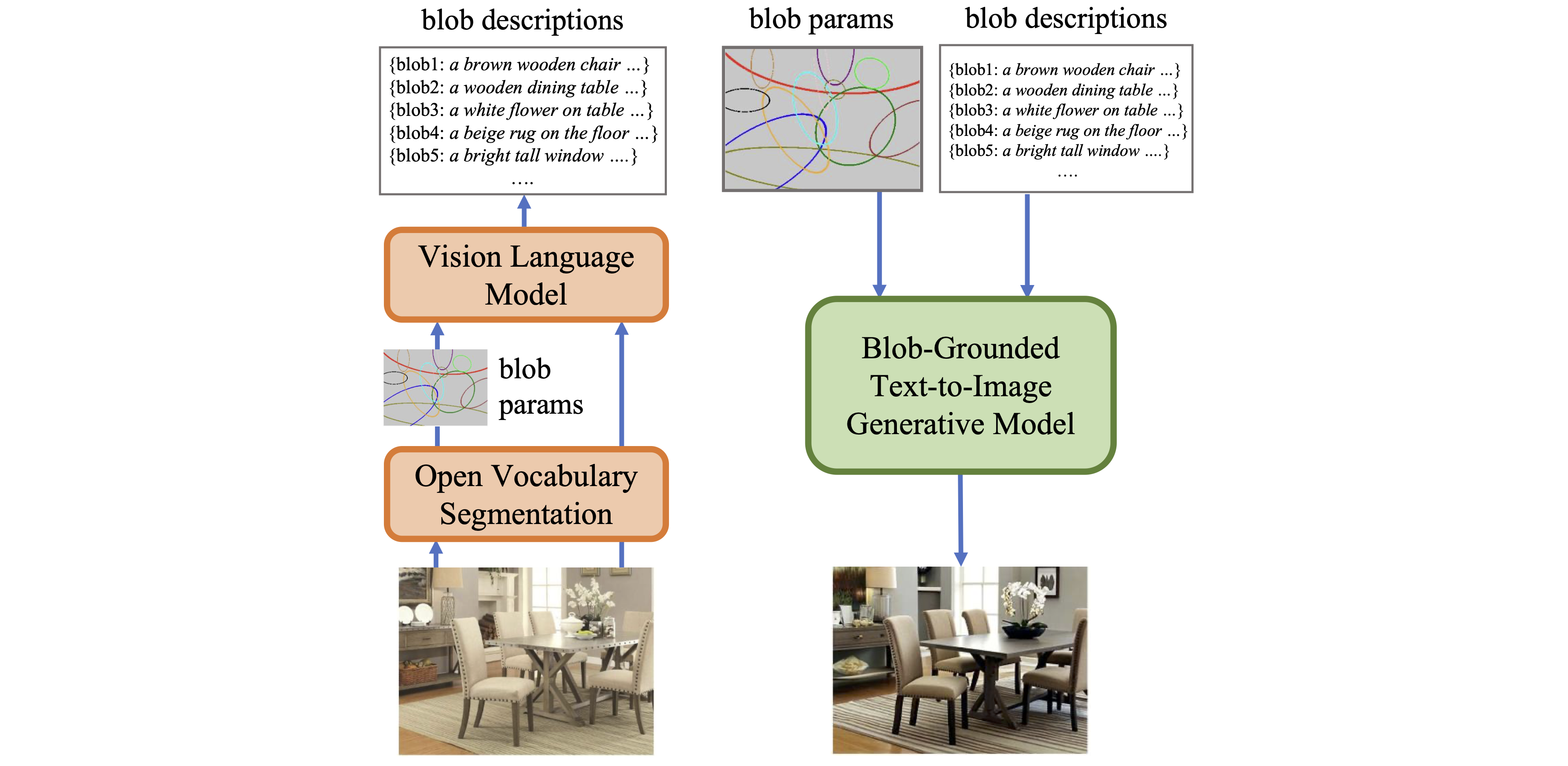

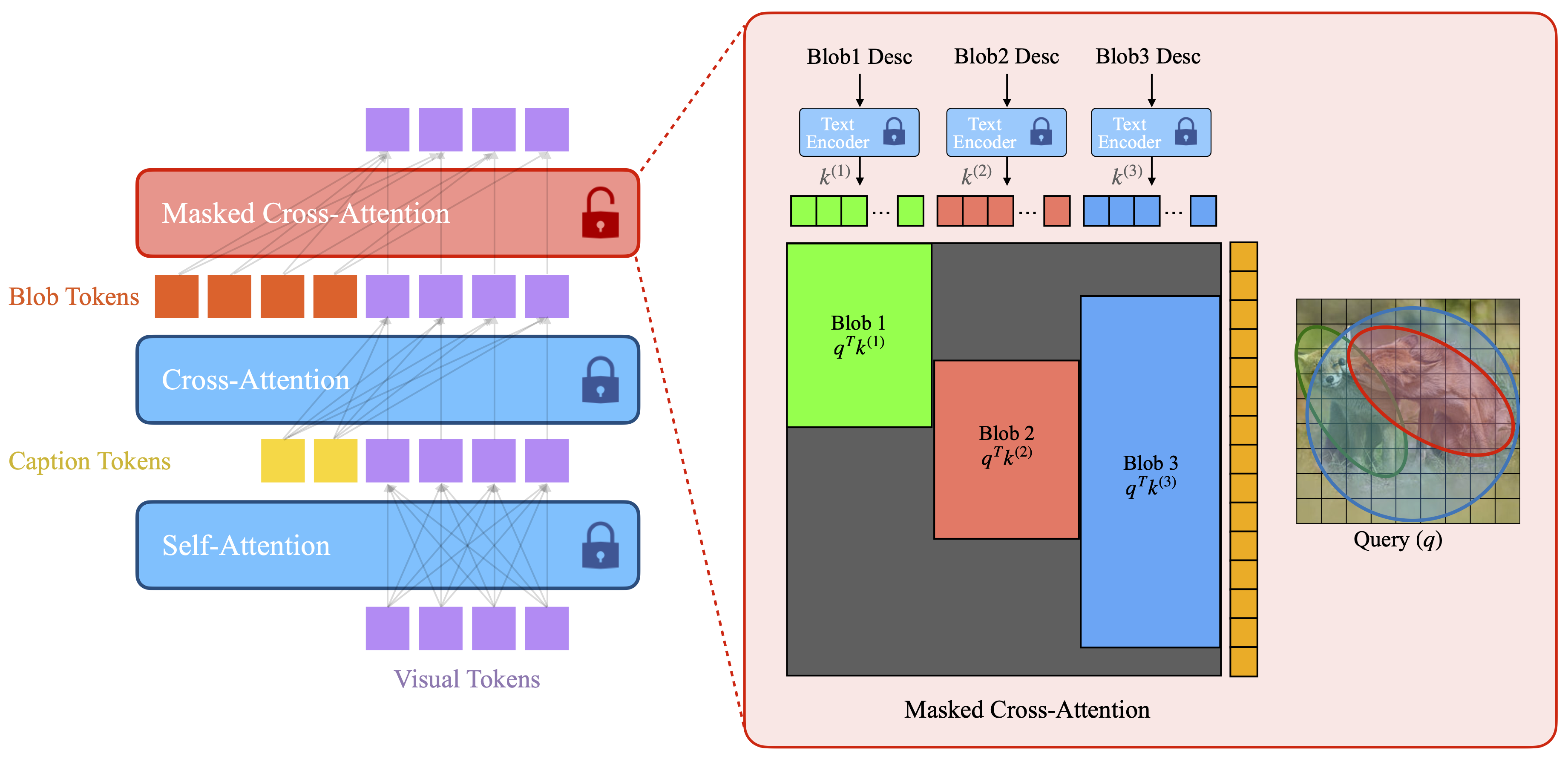

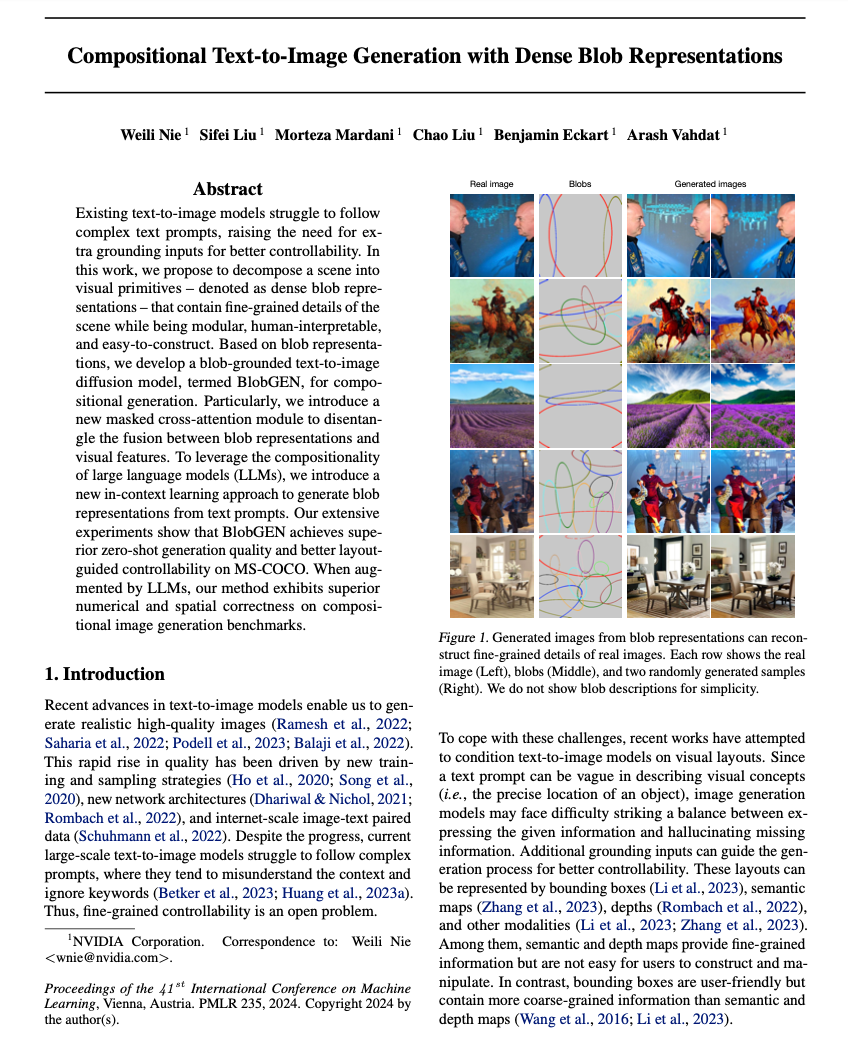

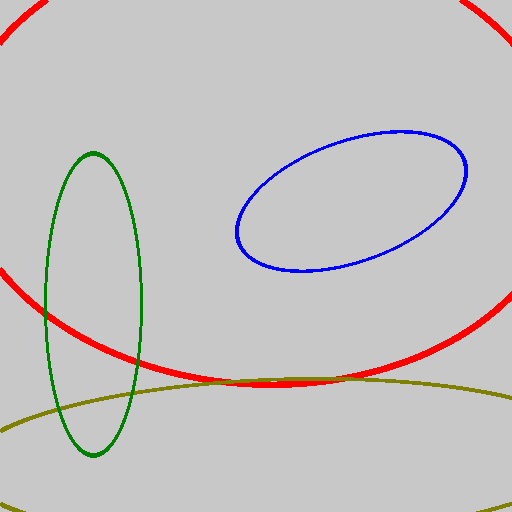

Existing text-to-image models struggle to follow complex text prompts, raising the need for extra grounding inputs for better controllability. In this work, we propose to decompose a scene into visual primitives - denoted as dense blob representations - that contain fine-grained details of the scene while being modular, human-interpretable, and easy-to-construct. Based on blob representations, we develop a blob-grounded text-to-image diffusion model, termed BlobGEN, for compositional generation. Particularly, we introduce a new masked cross-attention module to disentangle the fusion between blob representations and visual features. To leverage the compositionality of large language models (LLMs), we introduce a new in-context learning approach to generate blob representations from text prompts. Our extensive experiments show that BlobGEN achieves superior zero-shot generation quality and better layout-guided controllability on MS-COCO. When augmented by LLMs, our method exhibits superior numerical and spatial correctness on compositional image generation benchmarks.

|

|

|

|

|

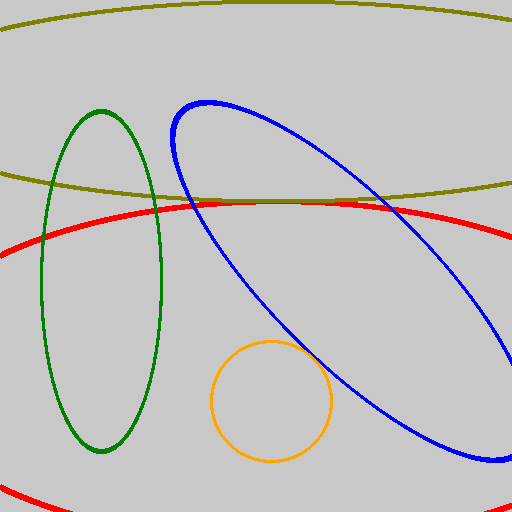

Caption: "Two astronauts in a grassy field with trees in the background." Blob 1: "The tree is green and leafy, with a lush and healthy appearance." Blob 2: "The grass is tall and lush, with a mix of green and brown colors." Blob 3: "The astronaut wears a white space suit and a large glass helmet, floating in the air with his body stretched." Blob 4: "The astronaut appears to be walking in a wooded area, surrounded by trees. The astronaut wears a white space suit and a large glass helmet." |

Caption: "A robot is playing a soccer ball with a giraffe on a beach." Blob 1: "The sky is light blue with a few white clouds." Blob 2: "The beach is full of golden sands that meet the azure sea waters." Blob 3: "The robot is a humanoid robot with a white body and two black eyes." Blob 4: "The ball is a pink soccer ball designed for professional use." Blob 5: "The giraffe is tall and brown, with a long neck and distinctive spotted coat." |

|

|

|

|

|

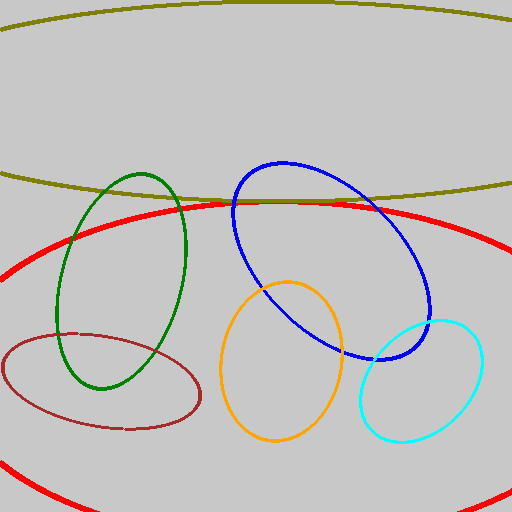

Caption: "A dog who is a doctor is talking to a cat who is the dog doctor's patient." Blob 1: "The wall is in light gray color with a landscape painting on it." Blob 2: "The floor is light brown and appears to be made of hardwood. It has a natural and warm appearance and is large and spacious." Blob 3: "The cat is in gray color with a fat and fluffy body. It is sitting on a bench, listening the instructions of the dog doctor." Blob 4: "The bench is in black color with a leather texture." Blob 5: "The desk is in light blue color. It appears to be a classic work desk." Blob 6: "The dog has a brown head and dresses a white doctor uniform. It is sitting on a chair, talking to the cat." Blob 7: "The chair is a tan-colored leather chair, featuring a stitched design." |

Caption: "Two raccoons walk under a drizzly rain, and each of them holds a umbrella." Blob 1: "The sky is in a dark grey color with heavy clouds hanging low and a drizzly rain in the background." Blob 2: "The building stands tall like proud sentinels, their steel and glass facades reflecting the city's vibrant energy." Blob 3: "The street is wet and sandy, with a black and white color scheme." Blob 4: "The raccoon has a pointed snout, a mask-like pattern of black fur around its eyes. Its body is stout and compact with grayish-brown fur." Blob 5: "The umbrella is red and its style is a traditional, collapsible design." Blob 6: "The raccoon has a pointed snout, a mask-like pattern of black fur around its eyes. Its body is stout and compact with grayish-brown fur." Blob 7: "The umbrella is blue and its style is a traditional, collapsible design." |

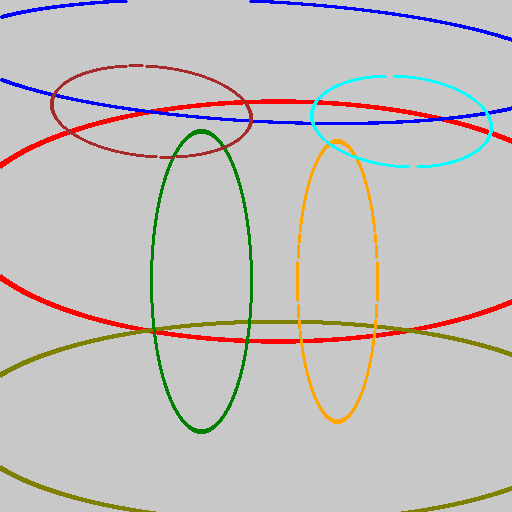

Image generation from user-constructed blob representations (blob parameters denoted as different ellipses and blob descriptions denoted by text sentences) along with the global caption. From left to right in each example, we show the blob ellipses, the generated image superimposed by blobs, and the generated image itself, respectively, and we also show the global caption and each blob description in the below. We vary the random seed for diverse outputs while all follow the input blob representations.

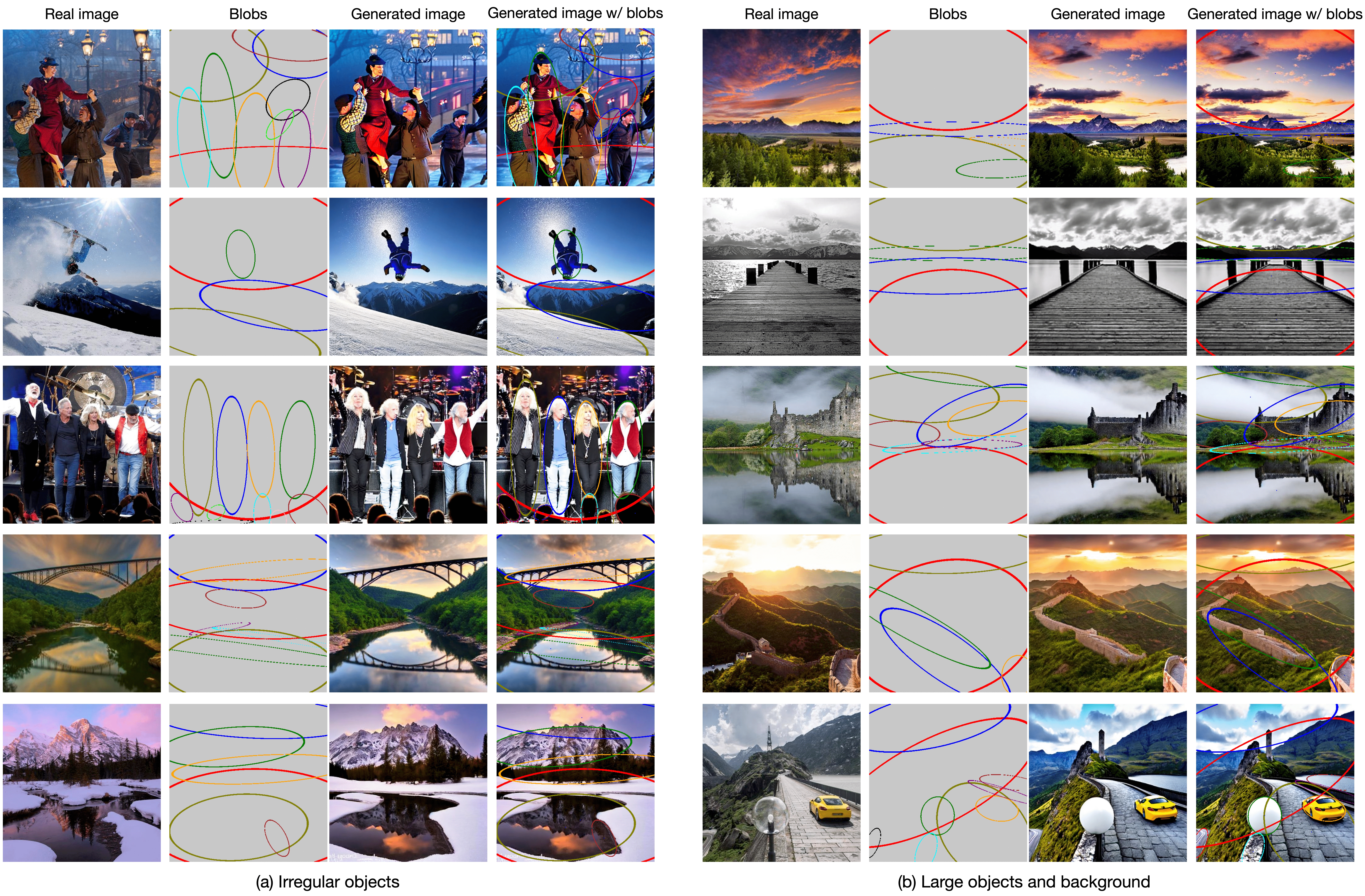

Blob representations capture fine-grained details of real images

We show visual examples of how blob-grounded generation (BlobGEN) can capture fine-grained details of real images, in particular those with irregular objects, large objects and background. For example, the first three examples in (a) show that blob representations can capture "a person with waving hands outside the blob". the last two examples in (a) show that blob representations can capture "a river with irregular shapes". Also, the fourth example in (b) also shows that blob representations can capture "the great wall with a zigzag shape". As for large objects and background, the first two rows in (b) show that blob representations can capture "sky" with the similar color and mood. The second row in (b) show that blob representations can capture the large "pier" with a similar color, pattern and shape. The third row in (b) show that blob representations can capture the large "foggy grass" and its reflection in the water. The last two rows in (b) show that blob representations can capture the large mountains in the background.

Examples of blob-grounded generation capturing (a) irregular objects (e.g. "person with waving hands" and "river"), and (b) large objects and background (e.g., "sky", "mountain" and "grass"). From left to right in each row, we show the reference real image, blobs, generated image and generated image superposed on blobs. Note that images have been compressed to save memory. Better zoom in for better visualization.

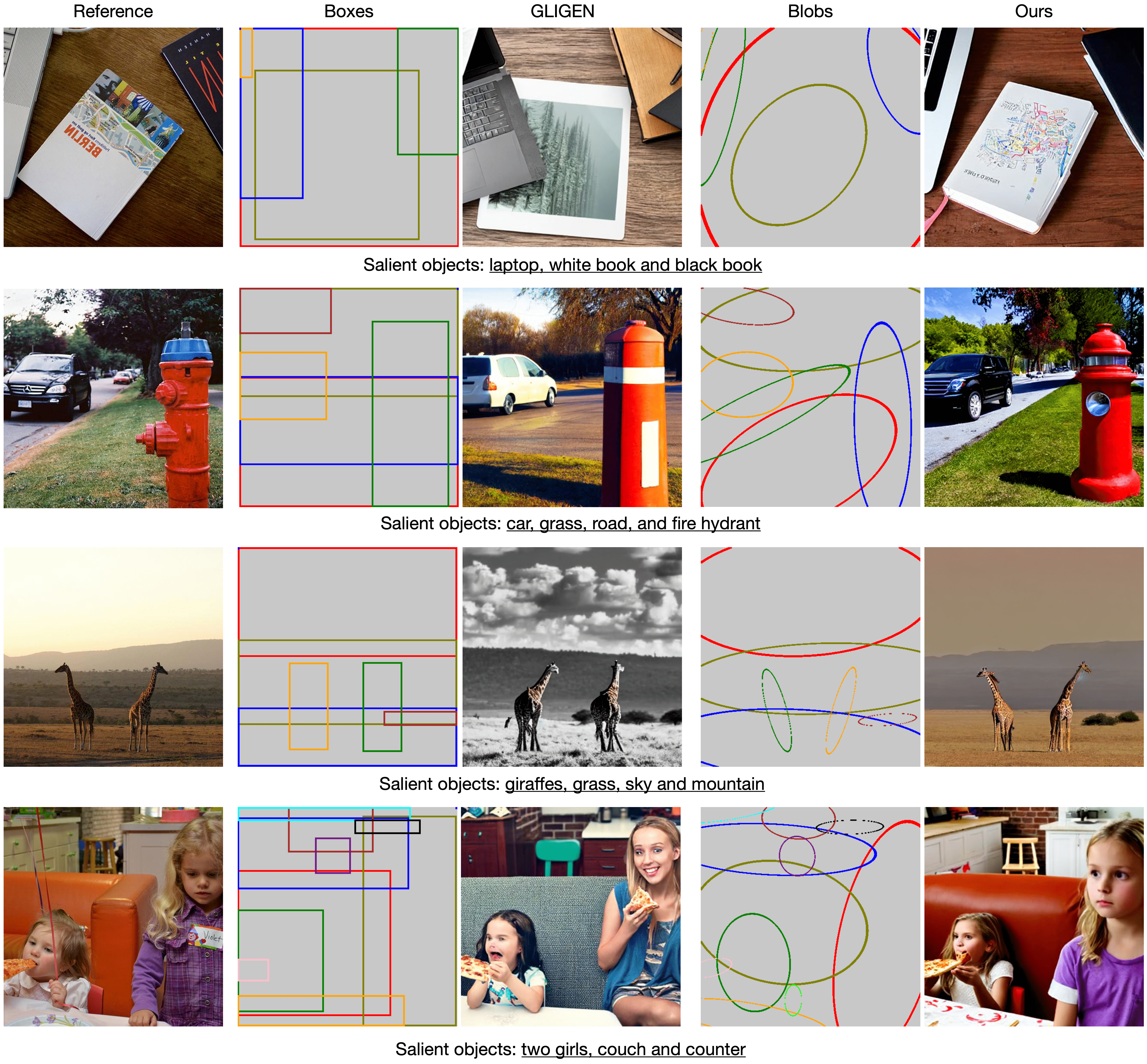

Comparison between of blob- and box-grounded generation

To further demonstrate that blob representations can capture more fine-grained information than bounding boxes, we provide qualitative results of comparing blob-grounded generation (Ours) and box-grounded generation (GLIGEN [1]). We can clearly see that generated images from blob representations can better reconstruct fine-grained details of the reference image than those from bounding boxes. For instance, in the first example, the laptop's orientation and the two books' orientation and color in the generated image from our method closely follow the reference image while GLIGEN does not. In the second example, the car's color and orientation and the grass and road's appearance and orientation from our method also more closely follow the reference image. In the third example, the two giraffes' orientation is better captured by blob representations than bounding boxes, with a direct comparison to the reference. In the last example, the two girls' appearance and pose, the couch's color, and the orientation and appearance of the counter and chair in the background are also better captured by blob representations.

More qualitative results of comparing blob-grounded generation (Ours) and box-grounded generation (GLIGEN [1]). In each row, we visualize the reference real image (Left), bounding boxes and GLIGEN generated image (Middle), blobs and our generated image (Right). We also highlight the salient objects in each example to guide the scrutinization of how the respective generated image differs from reference image. Note that images have been compressed to save memory. Better zoom in for better visualization.

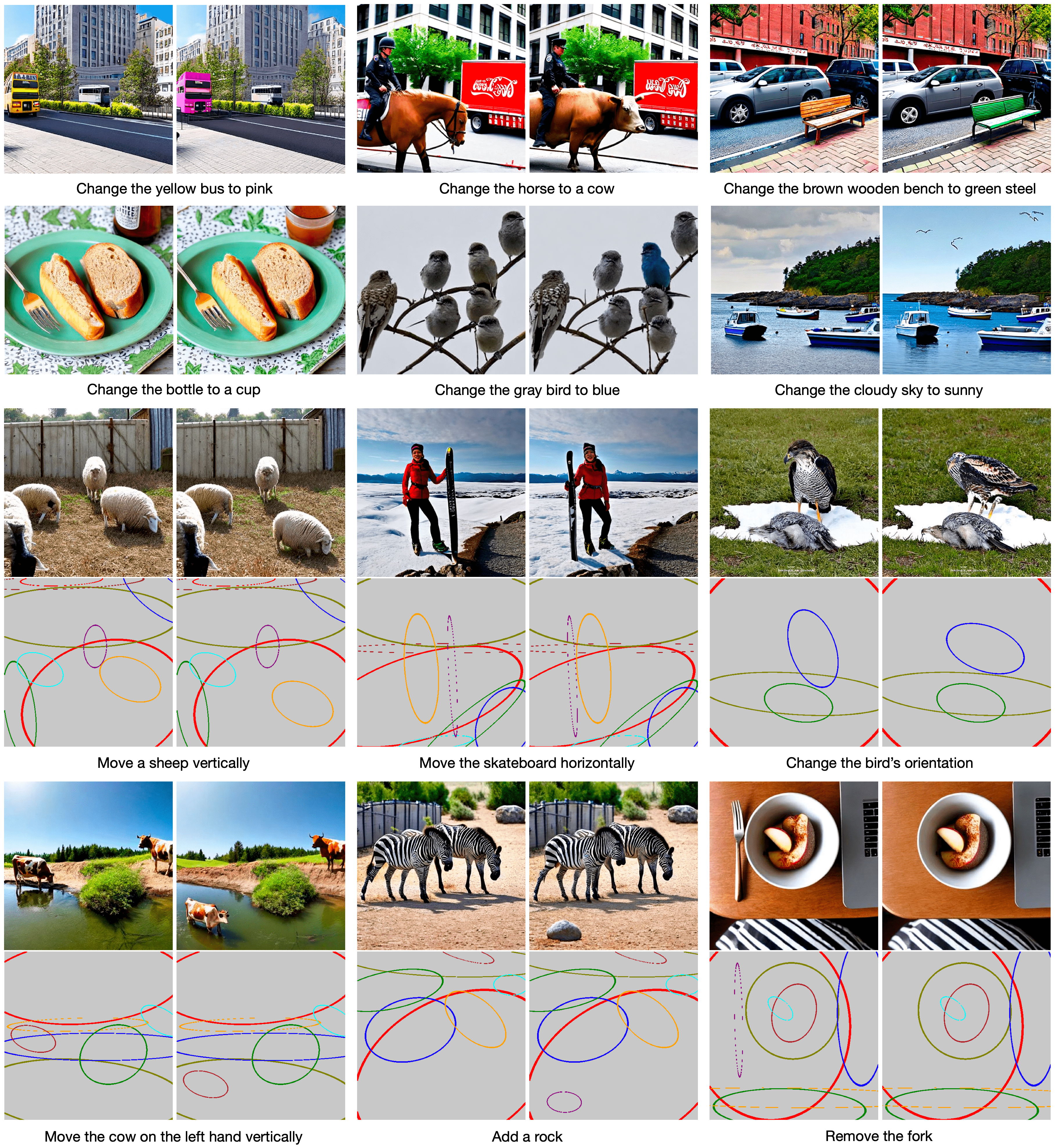

Manipulating blobs to unlock various local image editing tasks

We visualize various image editing results by manually changing a blob representation (e.g., either blob description or blob parameter) while keeping other blobs the same. Our method can enable different local editing capabilities by solely changing the corresponding blob descriptions. In particular, we can change the object color, category, and texture while keeping the unedited regions mostly the same. Furthermore, our method can also make different object repositioning tasks easily achievable by solely manipulating the corresponding blob parameters. For instance, we can move an object to different locations, change an object's orientation, and add/remove an object while also keeping other regions nearly unchanged. Note that we have not applied any attention map guidance or sample blending trick during sampling, to preserve localization and disentanglement in the editing process. Thus, these results demonstrate that a well-disentangled and modular property naturally emerges in our blob-grounded generation framework.

Various local image editing results of BlobGEN, where each example contains two generated images: (Left) original setting and (Right) after editing. The top two rows show the local editing results where we only change the blob description and since the blob parameters stay the same after editing, we do not show blob visualizations. The bottom four rows show the object reposition results where we only change the blob parameter. Note that images have been compressed to save memory. Better zoom in for better visualization.

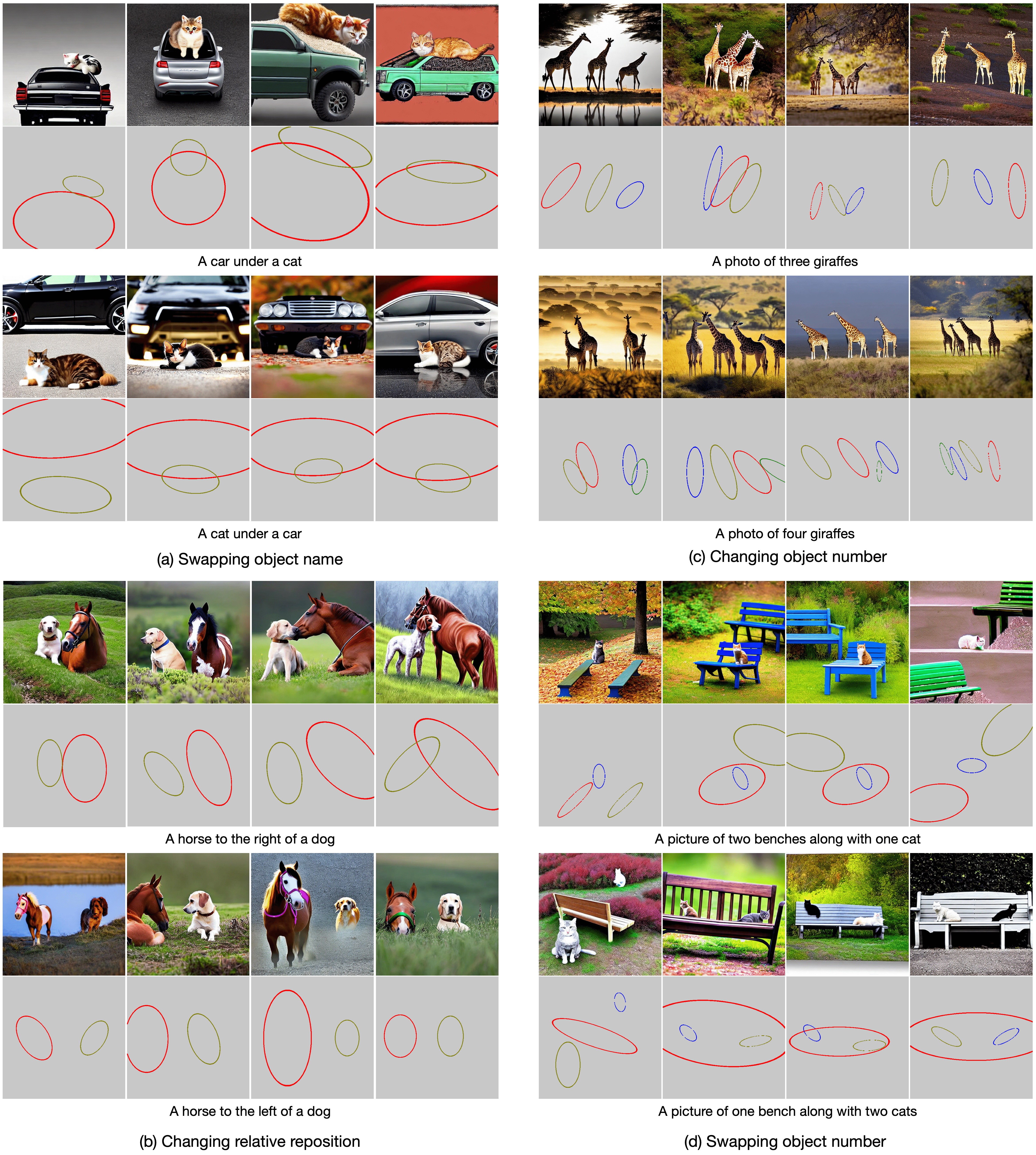

Compositional generation from blobs controlled by LLMs

Blob representations can be generated by LLMs from a global caption. To demonstrate the blob control from LLMs, we consider four cases of how LLMs understand compositional prompts for correct visual planning: (a) swapping object name ("cat" <-> "car"), (b) changing relative reposition ("left" <-> "right"), (c) changing object number ("three" <-> "four"), and (d) swapping object number ("one bench & two cats" <-> "two benches & one cat"). We can see that LLMs have the ability of capturing the subtle differences when the prompts are modified in an "adversarial" manner. Besides, LLMs can generate diverse and feasible visual layouts from the same prompt, which BlobGEN can use for robustly synthesizing correct images of high quality and diversity.

Qualitative results of blob control over using LLMs, where we consider four cases of how LLMs understand compositional prompts for correct visual planning: (a) swapping object name ("cat" <-> "car"), (b) changing relative reposition ("left" <-> "right"), (c) changing object number ("three" <-> "four"), and (d) swapping object number ("one bench & two cats" <-> "two benches & one cat"). In each example, we show diverse blobs generated by LLMs (bottom) and the corresponding images generated by BlobGEN (top) from the same text prompt. Note that images have been compressed to save memory. Better zoom in for better visualization.

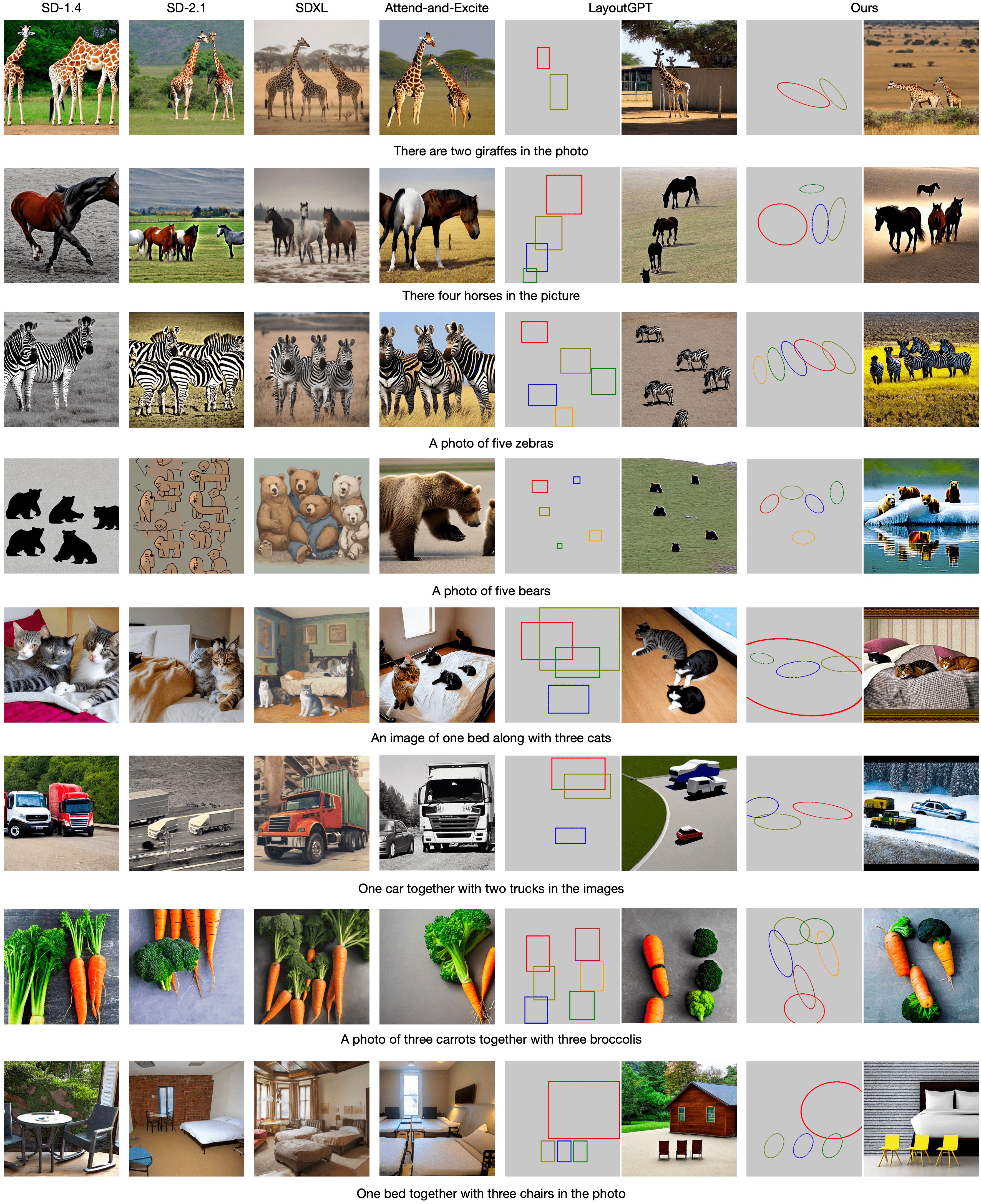

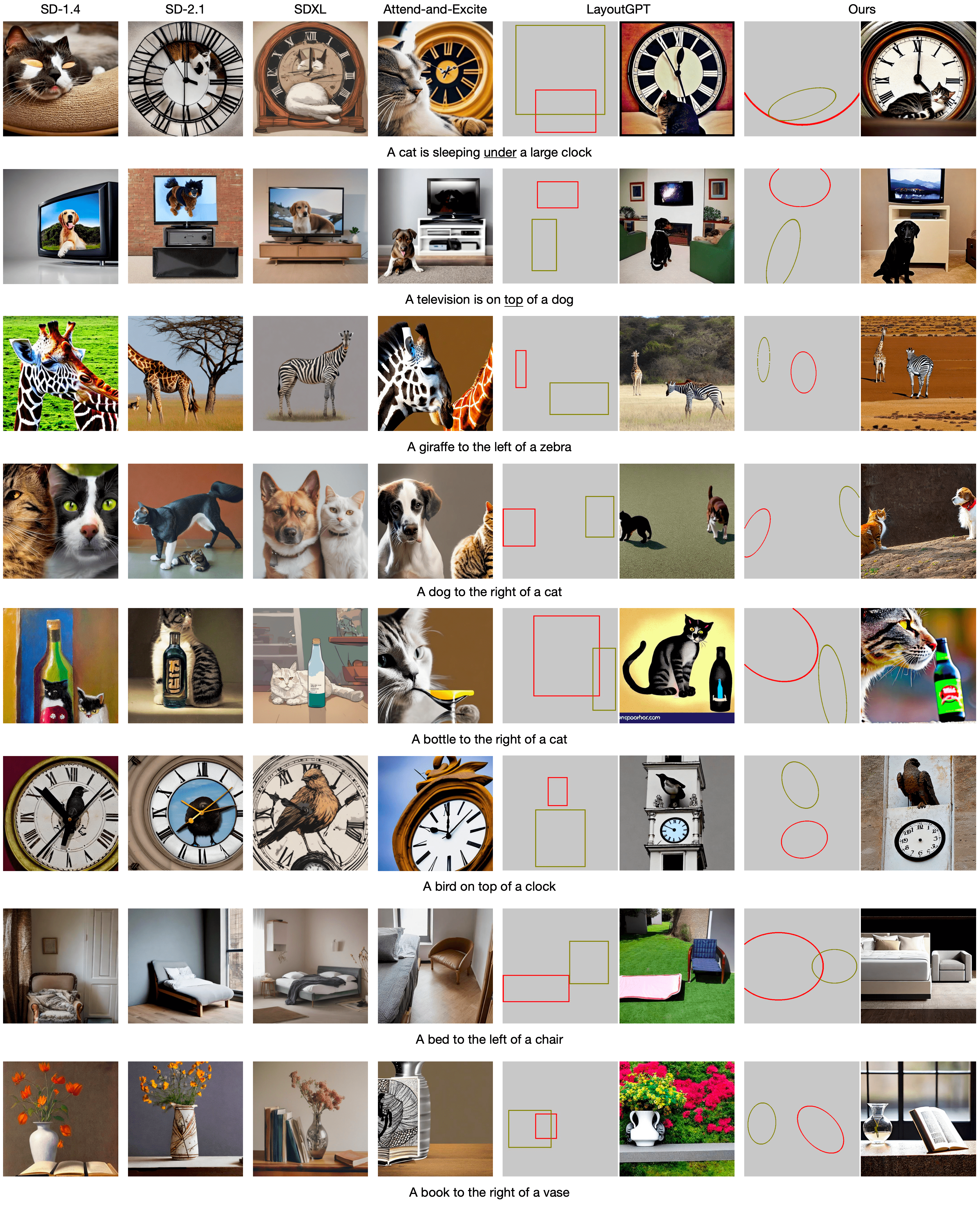

Comparison with previous methods on compositional generation

We show the numerical and spatial reasoning results of our method and previous approaches in the following two figures, respectively. In addition, we visualize the layouts inferred by GPT4 for both GLIGEN and our method. We can see that all methods without layout planning in the middle always fail in the task, including SDXL that has a large model size and more advanced training procedures, and Attention-and-Excite that has an explicit attention guidance. Compared with LayoutGPT based on GLIGEN, our method can not only generate images with better spatial and numerical correctness, but also in general has better visual quality with less ``copy-and-paste'' effect.

Qualitative results of various methods in numerical reasoning tasks on the NSR-1K benchmark [2]. In our method, given a caption, we prompt GPT4 to generate blob parameters (Left) and LLAMA-13B to generate blob descriptions (not shown in the figure), which are passed to BlobGEN to synthesize an image (Right). Note that images have been compressed to save memory. Better zoom in for better visualization.

Qualitative results of various methods in spatial reasoning tasks on the NSR-1K benchmark [2]. In our method, given a caption, we prompt GPT4 to generate blob parameters (Left) and LLAMA-13B to generate blob descriptions (not shown in the figure), which are passed to BlobGEN to synthesize an image (Right). Note that images have been compressed to save memory. Better zoom in for better visualization.

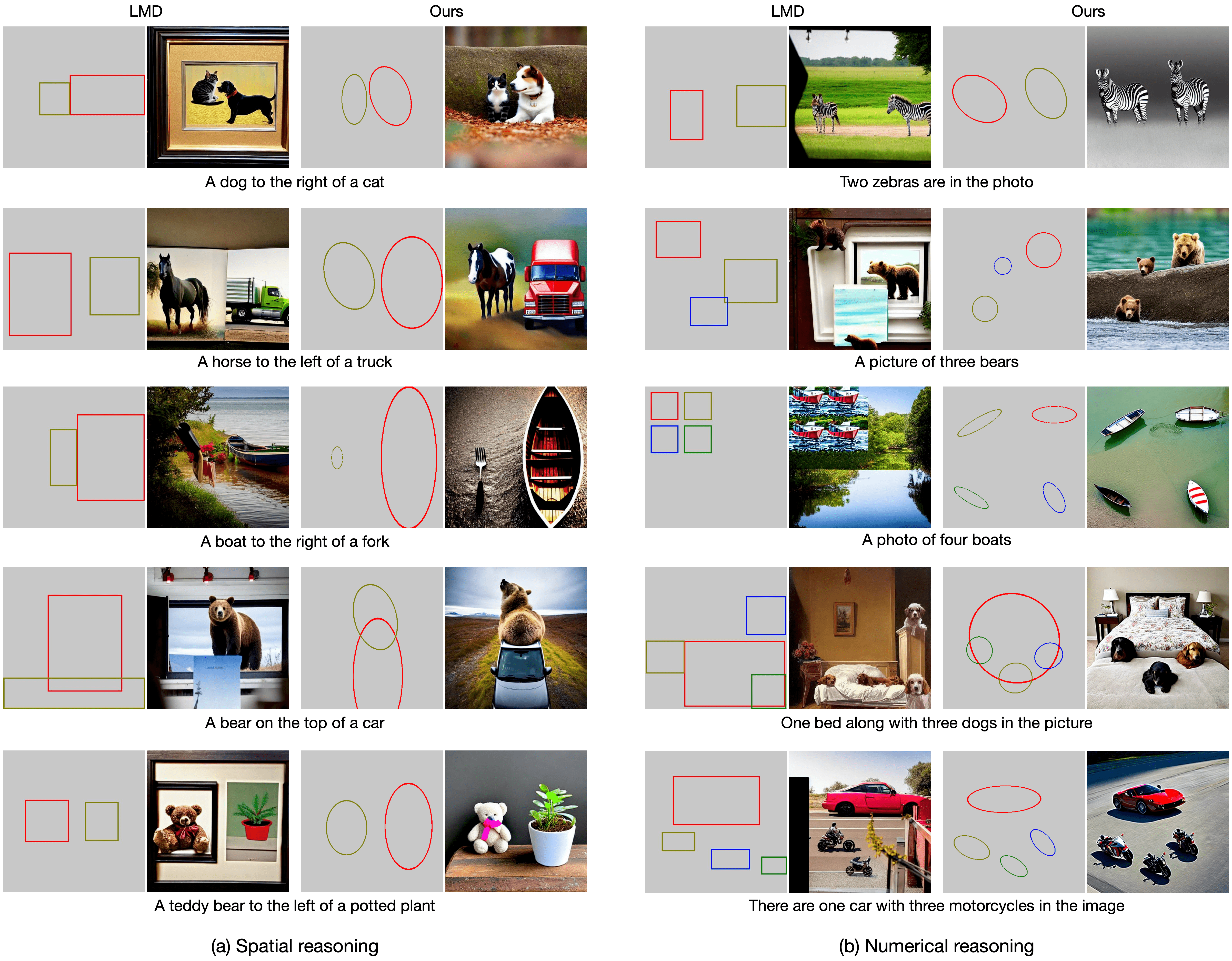

Comparison with LMD [3] using fixed in-context examples for LLMs

We qualitatively compare our method and LMD [3] on the NSR-1K benchmark. For a fair comparison, we use the same 8 fixed in-context demo examples (without retrieval) for GPT-4 to generate bounding boxes for LMD and blobs for our method, respectively. We observe that 1) for some complex examples, such as "a boat to the right of a fork" in (a) and "there are one car with three motorcycles in the image" in (b), LMD fails but our method works; 2) LMD consistently has the "copy-and-paste" artifact in its generated images (since it modifies the diffusion sampling process for compositionality), such as "a teddy bear to the left of a potted plant" in (a) and "a photo of four boats" in (b), while our generation looks much more natural; and 3) the sampling time of LMD is around 3x slower than our method.

Qualitative results of comparing our method with LMD [3] on the NSR-1K benchmark proposed by LayoutGPT [2] for spatial and numerical reasoning. In each example, we first prompt GPT-4 to generate boxes for LMD and blobs for our method, respectively, with the same 8 fixed in-context demo examples. The generated boxes and blobs are passed to LMD and BlobGEN to generate images, respectively. Note that images have been compressed to save memory. Better zoom in for better visualization.

References

[1] Y. Li, et al. "GLIGEN: Open-set grounded text-to-image generation.", CVPR 2023.

[2] W. Feng, et al. "LayoutGPT: compositional visual planning and generation with large language models.",

NeurIPS 2023.

[3] L. Lian, et al. "LLM-grounded Diffusion: Enhancing Prompt Understanding of Text-to-Image Diffusion

Models with Large Language Models.", TMLR 2023.

Paper

Compositional Text-to-Image Generation with Dense Blob Representations

Weili Nie, Sifei Liu, Morteza Mardani, Chao Liu, Benjamin Eckart, Arash Vahdat

Citation

@inproceedings{nie2024BlobGEN,

title={Compositional Text-to-Image Generation with Dense Blob Representations},

author={Nie, Weili and Liu, Sifei and Mardani, Morteza and Liu, Chao and Eckart, Benjamin and Vahdat, Arash},

booktitle = {International Conference on Machine Learning (ICML)},

year={2024}

}